End-to-End Conceptual Guarding of Neural Architectures

News

Partners

Positions

Publications

People

Applications

Index of Benchmarks

Events

Awards

Research Project

EPSRC Reference: EP/T026995/1

Title: EnnCore: End-to-End Conceptual Guarding of Neural Architectures

Call: Security for all in an AI enabled society

Summary

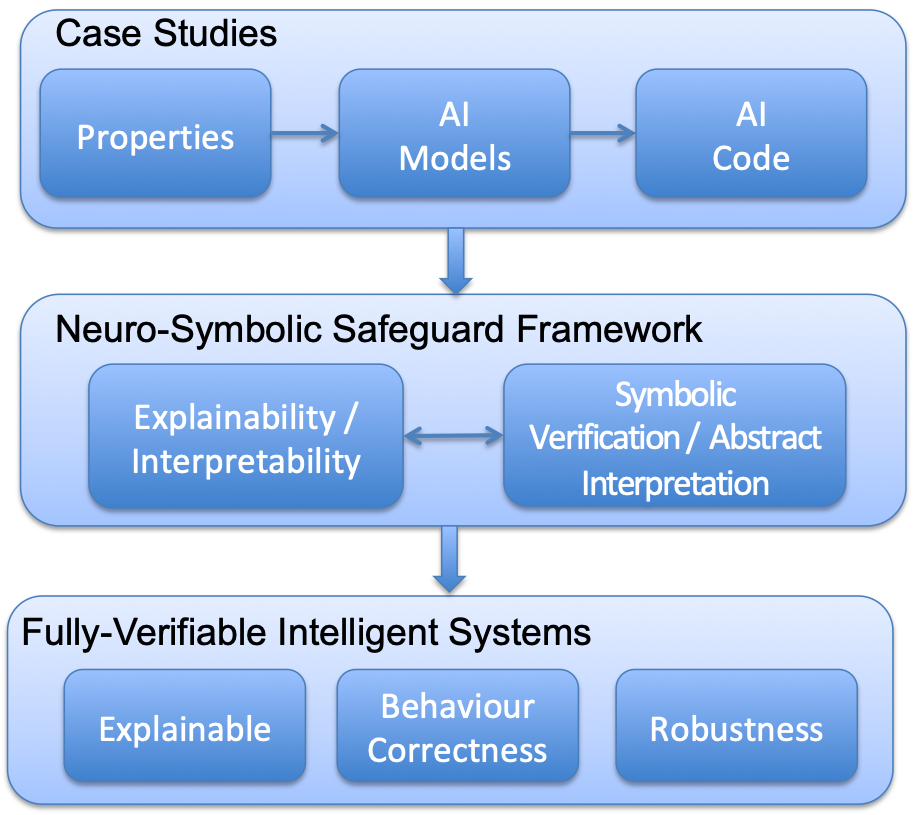

EnnCore will address a fundamental security problem in neural-based (NB) architectures, allowing system designers to specify and verify a conceptual/behavioural hardcore to the system, which can be used to safeguard NB systems against unexpected behaviour and attacks. It will pioneer the dialogue between contemporary explainable neural models and full-stack neural software verification. We will, therefore, develop methods, algorithms and tools to achieve fully-verifiable intelligent systems, which are explainable, whose correct behaviour is guaranteed, and that are robust towards attacks.

EnnCore will be validated on three diverse and high-impact application scenarios. (i) Securing an AI system for cancer diagnosis (health -- Cancer Research UK, The Christie). (ii) Ensuring ethical and legal behaviour of a conversational agent (health -- Cancer Research UK, The Christie). (iii) Securing an AI system for demand response (energy -- Urbanchain). The use cases will be co-designed and validated under real-world data conditions with the help of one clinical and one industrial partner.

News

19/02/2020: EnnCore was officially annunced at the EPSRC website.

Vision

EnnCore will address a fundamental research problem to ensure the security of neural-enabled components by taking into account its entire lifecycle from development to deployment. Solving this research problem will have a far-reaching impact on areas such as health care, ethically grounded AI and demand response, which heavily depend on secure and trusted software components to meet safety-critical requirements. Our overall research objective is to have a long-term impact on writing secure and trusted AI-based software components. We will thus contribute to a shared vision of fully-verifiable software, where underlying neural-based architectures are built with strong symbolic and mathematical guarantees.

EnnCore: proposed approach.

Objectives

EnnCore will design and validate a full-stack symbolic safeguarding system for NB architectures. This project will advance the state-of-the-art in the development of secure Deep Neural Network (DNN) models by mapping, using and extending explainability properties of existing neuro-symbolic DNN architectures (e.g., Graph Networks, Differentiable Inductive Logic Programming). We will thus safeguard them with symbolic verification, abstract interpretation and program synthesis methods. EnnCore will pioneer the multi-disciplinary dialogue between explainable DNNs and formal verification. In particular, EnnCore will deliver safeguarding for safety-critical NB architectures with the following novel properties:

(1) Full-stack symbolic software verification: we will develop the first bit-precise and scalable symbolic verification framework to reason over actual implementations of DNNs, thereby providing additional guarantees of security properties concerning the underlying hardware and software, which are routinely ignored in the existing literature.

(2) Explainability / Interpretability: EnnCore will pioneer the integration of knowledge-based and neural explainability methods to support end-users specifying security constraints and diagnosing security risks, to provide assurances about its security as NB models evolve. Particular attention will be given to the quantitative and qualitative characterization of semantic-drift phenomena in security scenarios.

(3) Scalable: we will systematically combine contemporary symbolic methods for explaining, interpreting and verifying neural representations. In particular, we will develop a neuro-symbolic safeguard framework by linking the structural knowledge-based representation elements to the attentional architecture elements, to achieve scalability and precision in an unprecedented manner. We will also develop new learning techniques for reusing information across different verification runs to reduce formulae size and consistently to improve constraint solving.

Partners

EnnCore is a joint project with the University of Manchester, University of Liverpool, UrbanChain, and Cancer Research UK.